Mapping Rent Control In Jersey City Part 1

Jersey City is one of the most expensive cities in the country for renters. On paper, it has a strong rent control regime: any building with 5+ units is rent controlled unless it fits into one of three narrow exemptions. But in practice, most tenants don’t know whether their building is rent controlled, and rent control is seldom enforced. For tenants, figuring out whether their building is rent controlled can be extremely time and labor intensive. This post documents preliminary steps towards a comprehensive, city-wide audit to determine which buildings are legally rent controlled.

State & Local Rent Control Rules

In 1987, New Jersey authorized its municipalities to pass their own rent control ordinances in the Newly Constructed Multiple Dwellings Law (NCMD). N.J.S.A. 2A:42-84.1, et seq. However, in passing NCMD, the state legislature determined it “necessary for the public welfare to increase the supply of newly constructed rental housing.” N.J.S.A. 2A:42-84.5(b). To avoid deterring new construction, the legislature elected to “exempt new construction of rental []units from municipal rent control.” Id. Accordingly, the NCMD states that any residential properties constructed after June 25, 1987 may be exempt from municipal rent control for either (a) 30 years from the completion of construction, or (b) the period of amortization of any initial mortgage loan, whichever is less. N.J.S.A. 2A:42-84.2(a)-(b). To be exempt, a new building must file “a written statement of the owner's claim of exemption” with the municipal construction official at least 30 days prior to the issuance of a certificate of occupancy. N.J.S.A. 2A:42-84.4. New Jersey courts have affirmed the NCMD requirements. See Willow Ridge Apartments, LLC v. Union City Rent Stabilization Bd., No. A-3578-20 (N.J. Super. App. Div. 2022) (holding newly constructed apartment building was subject to rent control because property owner could not prove compliant filing with construction official).

Following NCMD, Jersey City elected to pass an ordinance establishing local rent control for residential buildings. Jersey City Mun. Ord. § 260 et seq. Rent controlled buildings can only increase rent by 4% or the change in CPI, and any rent increase “in excess of that” is void. Id. § 260-2(D), 3(A). As it must, Section 260 incorporates the exemption process from NCMD, for buildings constructed post-1987 that timely file a notice of exemption. Id. § 260-6(C). And Section 260 further exempts buildings from rent control where: (1) it has four or fewer units, (2) it is newly-constructed with 25+ units and located within a council-approved redevelopment area, or (3) it is a low rent public housing development. Id. § 260-1(A)(1)-(4).

Taking NCMD and Section 260 together, rent control applies to all five+ unit residential buildings in Jersey City, unless the building:

was constructed after 1987 and timely filed a compliant exemption; or

is new 25+ unit development that is located in a redevelopment area; or

is a low rent public housing development.

Mapping Rent Control

Given the legal framework, to map buildings subject to rent control, we need the following information:

Shapefiles for buildings and parcels;

Whether each building filed an exemption filing ([filing]);

The year each building was constructed ([year]);

The number of units for each building ([units]);

Whether the building is located in a redevelopment area ([redev]) and, if so, what year the area was council-approved ([redev-year]); and

Whether the building is a low rent public housing development ([JCHA]).

Once I have all of the above information, I can tag buildings as rent controlled by default where: [units] > 4. Then, I can tag buildings as exempt where: ([year] > 1987 AND [exemption filing] = true) OR [JCHA] = true OR ([redev] = true AND [year] >= [redev-year] AND [units] > 25).

I started by downloading the shapes of New Jersey municipalities from the NJ Geographic Information Network and extracting the polygon for Jersey City—not strictly necessary, but might be nice to have for the final maps. Then I got into it:

Step 1: Map Parcels

First, I downloaded parcel shapes data from JC Open Data and mapped them (below). Unfortunately, that dataset appears to have last been updated in 2018—not great.

To get more recent parcel shapes, I checked the county GIS site, which does have parcel shapes updated as of August 2025—but it doesn’t have easily downloadable shapefiles. To get this data in editable form, I found the GIS REST service URL for the Hudson County parcels layer in the metadata. Then, I used the QGIS “Add ArcGIS Feature Server Layer” feature to connect this service layer and visualize it. In an ideal world, I would have just been able to select all parcels within Jersey City and export this selection directly as a local shapefile. However, This REST layer had a max record count of 2000 for queries. So I used a python script to autoquery records in sets of 2000 and export a shapefile, which I then added as a layer. I then edited the layer to delete all features in the county except those in Jersey City. I would give you an image of that, but it looks is exactly the same as the 2018 data.

After all that, I bulk downloaded current assessment data for Jersey City from the county Assessment Records Search tool in a spreadsheet, and merged that data onto the parcel shapes by block, lot, and qual. Unlike the parcel shapes data, the assessor data has important variables like addresses, owner information, assessment information, and critically, building age and property class information (a proxy for number of units)—these variables will become important below. A whole lot of work for a pretty boring map to start:

Step 2: Map Buildings

Next, I imported OSM buildings data by using the QuickOSM plugin. I plan to use this data to identify shapes for buildings that are associated with exemption filings. This isn’t strictly necessary—I could do everything by parcels, which contain basically all of my variables of interest. But 1) I am an aesthete and buildings are prettier; 2) I worked hard to make this data way back read—read about here; and 3) at the end of the day, tenants are going to intuitively think about whether their building is rent controlled, not the parcel it’s on.

Step 3: Request Rent Control Exemption Filings and Map Them

This step is the doozy. In late 2022, I submitted an Open Public Records Act (OPRA) requesting every single rent control exemption filing in the city starting in 1987. You can see that request here. In addition to exemption filings, I requested related documents and communications. Over several months, after repeatedly clarifying that I sought all such records, I received responsive documents. In total, there were only responsive records associated with 128 properties. I saved those files in a Google Drive folder and prepared a spreadsheet summarizing the filings. These properties are not automatically exempt from rent control; with a cursory review, it’s clear that many of these properties do not have compliant filings. But regardless, for tenants wondering whether their building with 5+ units might be exempt from rent control, this list is a solid starting place. Note that I submitted an updated OPRA request in summer 2025 to identify any filings post-dating 2022, and that request is in progress. With my spreadsheet, I reverse-geocoded the addresses for these properties. Then I imported lat/long information into QGIS to create a point layer of exemption filings.

Then, I wanted to identify the specific building footprints associated with these addresses, so I did a spatial join. To my surprise, based on a manual check, this correctly identified buildings in about 60% of cases. The other 40% required manually looking up the address at issue, and then consulting google maps, satellite imagery, and the block/lot number in parcel data. In a few instances, the OSM buildings data wasn’t up to date, so I edited the OSM dataset before merging on the exemption filing information. After that, I can toggle off the points layer and map buildings with extant rent control exemption filings. See below right image.

Step 4: Map Building Years

Next, I focus on the building year information in the assessment data. I need accurate information about building construction years in order to (1) identify exempt new construction within redevelopment areas, and (2) confirm that all exemption filings were filed in connection with buildings constructed after June 25, 1987, per the NCMD. Regarding (1), the NCMD and Section 260 do not define “newly-constructed” for purposes of identifying exempt buildings in redevelopment areas, but I think the best reading of that provision is that buildings are exempt when they were constructed after the redevelopment plan in question was approved by the council. Regarding (2), the county assessor only keeps information on building construction years, not months/days, so the best I can do is tag buildings constructed after 1987 as post-1987 (1988+ below), which may introduce some errors if exemptions were filed before the end of 1987, but there shouldn’t be too many of those cases to investigate.

The building years information needs some clean up. The data identifies buildings constructed between 1720 and 2025, which makes sense. However, there are about 1300 parcels with nonsense year information, i.e., values like “0800,” or unintelligible information, i.e., values like “0000,” “0001,” “0005,” “0021,” etc., which could either be nonsense, or could indicate buildings constructed in ‘00, ‘01, ‘05, ‘21, etc. Unfortunately nothing in the state user manual for tax data helps me figure out how to treat the unintelligible values. In an abundance of caution, I treat building year info in all of these cases as null. Where rent control status hinges on the year for these buildings, we’ll need to manually investigation them.

Beyond these 1300 instances, there are a large number of parcels (9.9 thousand out of 59.5 thousand) where the building year is unknown/already null. This isn’t ideal, but until we load up redevelopment area data, we won’t have a sense of how many 25+ units in these areas are missing year information.

One weird thing I notice right away is that some buildings with exemption filings appear to have been constructed before 1987, which doesn’t make a lot of sense (unless the building filed an exemption well after construction was completed, in hopes of pulling a fast one on the city/tenants—possible). These are not cases where the building was constructed within 1987 and miscoded. I’ll investigate these instances once the rest of the audit is up and running.

Step 5: Map Building Units

In an ideal world, I would have comprehensive data about the number of units per building. This field isn’t standard in OSM or the county parcel/assessor information. However, assessor information does include a field called property class, which is a standardized field to indicate types of uses. According to the state user manual, for the classification of taxable real property, the following codes are applicable:

6A Personal Property Telephone

6B Machinery, Apparatus or Equipment of Petroleum Refineries

15A Public School Property

15B Other School Property

15C Public Property

15D Church and Charitable Property

15E Cemeteries and Graveyards

15F Other Exempt properties not included in the above classifications

1 Vacant Land

2 Residential (four families or less)

3A Farm (Regular)

3B Farm (Qualified)

4A Commercial

4B Industrial

4C Apartment

5A Class I Railroad Property

5B Class II Railroad Property

According to the manual, code 2 represents residential properties with four families or less, whereas 4C indicates an “apartment,” which by exclusion is 5+ families. It is not clear whether residential buildings with four families or less is equivalent to residential buildings with four units or less, but in any event, this is the best proxy we have. So I used the parcel/assessor data to map all buildings with property class 4C (below).

At this point, this looked generally right to me. But there are some properties with exemption filings, which I know to be residential apartments, which appear to have non-4C class codes. For example, 120 Clifton Place is The Beacon—a mixed use building with dozens of residential units. But its current property class is 1, for vacant properties. See the building selected in yellow below. This is true even in the most up to date assessor information through the county portal. Similarly, some big residential buildings downtown, like Portside Towers, aren’t 4C but are instead are coded 4A, or “commercial.” Just another thing to investigate when we get everything up and running.

Lastly, while the 4C code identifies 5+ unit residential buildings, that’s only half the battle; we still need to identify 25+ unit residential buildings located within redevelopment plan areas. There are no codes in the tax assessment data that comprehensively report the number of residential units (otherwise I would have started there). However, where the property class is 4 (such as 4C), there is a separate “class 4 use code” with three relevant codes: 020 for “APT - GARDEN”; 021 for “APT - HIGH RISE”; and 029 for “APT - OTHER.” High rise is most like to be 25+. But garden apartments, where multiple residential buildings in a complex surround a garden, could also be 25+ units, and so could “other” for that matter. Ultimately, newly constructed 4C properties in redevelopment areas may be rare—rare enough to investigate manually one by one—so I’ll put a pin in that for now.

Step 6: Map Redevelopment Plan Areas

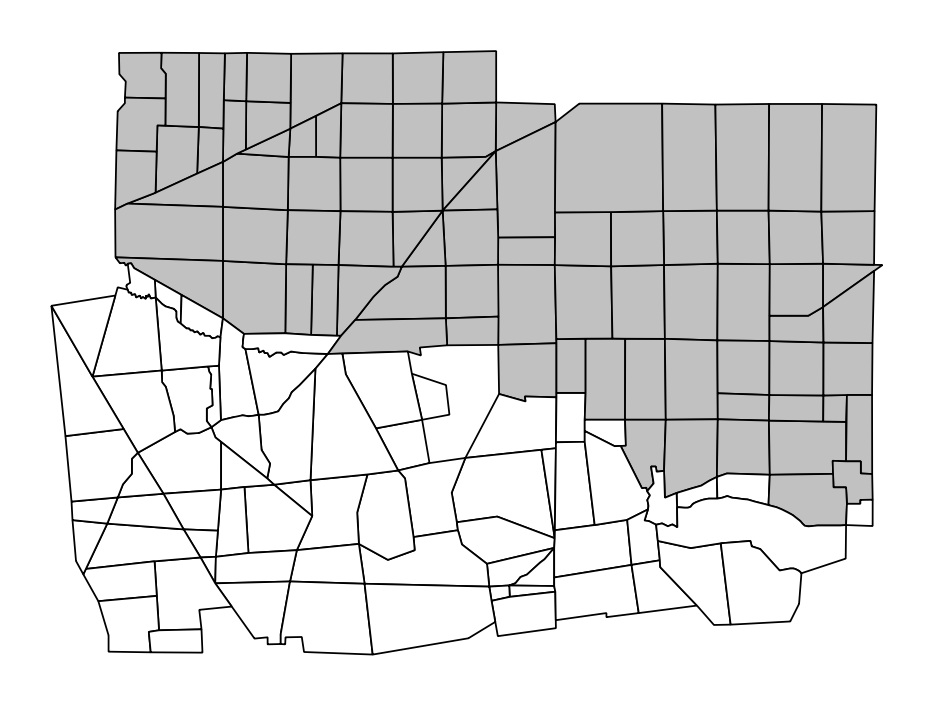

Next, I will need shapefiles for redevelopment areas, to identify every new building in one with 25+ units. Apparently there are 104 areas, according to the latest zoning map. Unfortunately, the underlying shapefiles are not available through the open data portal—there is one quite old file geodatabase on there but it is corrupted. But there is a web ArcGIS viewer with zoning and redevelopment plan information. Like I did to get parcel data from the county, I looked at the layer metadata, found the GIS REST service URL, and used the “Add ArcGIS Feature Server Layer” feature to connect this service layer and visualize it. Fortunately this file had fewer than 2000 objects, so I didn’t need to use a script to perform multiple queries. Once I loaded up the zoning information, there was a simple field called "redev area" which I used to query shapes of the 104 redevelopment plans and map them (turning off the building layer to keep things from getting too crazy).

This is the part where it got messy. I attempted to use a spatial join to automatically capture parcels that fall within redevelopment areas, but I hit two snags. First, the parcel data and redevelopment plan data aren't perfectly, precisely drawn, so capturing parcels exclusively within plan areas is very underinclusive of parcels of interest, and capturing parcels within or intersecting plan areas is highly overinclusive. Second, I kept getting errors for “invalid/complex geometries” in the parcel data because there are all sorts of geometry issues like non-connecting boundaries and vortexes. Given then, I selected by location to find parcels in each plan area, did an exhaustive manual data check, and eventually tagged those parcels with their plan area name. Then I merge plan area information onto those parcels tagged within a plan area. Conveniently, for each redevelopment plan, there is an "ADPT_DATE" field that indicates the date the plan was first adopted. Now I can map parcels within redevelopment plan areas:

Finally, I can map this information and display (1) parcels within a redevelopment area and (2) the subset of those parcels that were constructed after the plan adoption date, i.e., that might be exempt. Where parcels are missing building year information, I treat it as being in the former category. Unfortunately, I still don’t have information on the number of units per building to identify those with 25+, which are the ones that should actually be exempt, so I’ll need to manually investigate those at the end.

Step 7: Map Public Housing Developments

Lastly, I need to map low rent public housing developments. To start, I made a table of public housing developments, by referencing the Jersey City Housing Authority website. Then I used the block/lot information to merge that table onto parcels, and symbolize parcels by public housing status:

Next & Final Steps

With all that done, the data is nearly prepped. The few last pieces to run down are: (1) an OPRA response to confirm there are no new filings post 2022; and (2) how to identify 25+ unit residential buildings within the redevelopment plan areas (or buckle down and go through them manually). And then it’s time to get this up and searchable.